Yet Another Kubernetes Intro - Part 1 - What is it?

I’ve spent quite a bit of time trying to learn and understand Kubernetes. And in doing so, I have used numerous different resources on the interwebs to try and make sense of everything. However, no single resource could tell me all the things I wanted/needed to know in a useful way. Instead I ended up Googling quite a bit.

Because of this, I’m going to try to write my own introduction to Kubernetes. Yes, another introduction… However, this will be an introduction done in the way that makes sense to me. The way that I would have wanted it explained to me when I got started. It might not work for everyone. Hell, it might not even work for anyone but me. But this is just as much for me as it is for anyone who wants to read yet another “getting started with Kubernetes”.

What is Kubernetes?

I guess the first thing to answer is “what is Kubernetes”? What need does it fulfill? Why is it interesting?

I will assume that you already know Docker, or at least know of Docker and containers. The reason I’m bringing this up is, of course, that Docker is the foundation for Kubernetes! Or rather, containers are the foundation of Kubernetes. Docker just happens to be the default container engine for Kubernetes at the time of writing. It is possible to use other engines, but most people seem to use Docker… (Kubernetes 1.24 later removed its built-in Docker Engine support, so newer clusters typically run containerd or CRI-O, but images built with Docker still run on them just fine.)

Docker/containers give us a great way to bundle our applications, including the environments they need to be able to run, into a unit that we can deploy/host on any server that has a container engine installed. But the problem is that being able to start and run a container is often only one part of being able to run an application successfully in production.

The Docker container engine is pretty basic when it comes to actually running production workloads. It supports creating containers, setting resource limits, and restarting containers if they die. But this isn’t really enough in a lot of cases, mainly because we often (maybe even most of the time) want a load balanced environment with multiple instances of our containers running on multiple nodes. This is outside the scope of the Docker engine, and requires us to do a lot of manual work besides setting up the nodes themselves. Mainly, it forces us to configure a load balancer and make sure that we deploy our containers in the correct way to the correct nodes in the cluster. Not to mention that we have to figure out how containers on different nodes communicate with each other, and how to update the containers when that is needed. If you want a more complex, load balanced, resilient solution without a ton of manual work, you need to look somewhere else. And that somewhere else could be Kubernetes.

Note: I want to mention that Kubernetes is just one option. There are many more, but currently it seems as though Kubernetes is the preferred one…

Kubernetes is what is called a container orchestrator, and it “orchestrates” containers in a cluster of machines. And what does that mean? Well, it means that you have a couple of servers that should run your application, and Kubernetes takes on the role of managing the containers that run on those machines for you. This is called “orchestration”. Basically, you tell Kubernetes that you want to run X instances of your container, and Kubernetes figures out what machines have the capacity to do so, and tells them to run those containers.

However, it is a bit more advanced than that… You are not really telling Kubernetes to start X number of instances. You are actually telling it the desired state of your solution. You are telling it that you want the solution to always have X number of instances of your container running, no matter what.

So what is the difference between telling it to start X containers, and telling it that the desired state is to have X containers running? Well… The first time you set the desired state, the result is pretty much the same. Your defined number of containers are started on some nodes in the cluster. However, as soon as that has been done, the behavior is different. With desired state, Kubernetes takes over and constantly compares the current state of the solution with the desired state, making sure that X containers are running. If a container dies, Kubernetes notices this and restarts or replaces it. If a whole node (server) should die, or get disconnected, Kubernetes will find out, and schedule replacements for the lost containers on other nodes with available resources. If the server should come back online again, Kubernetes notices this as well and can use it for new workloads, although by default it does not move already-running containers back onto it. It will even go as far as to kill containers that you spin up manually, if they look like the ones it manages and push the count above the desired number. The only goal is to make sure that the desired number of containers is running.
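To make the difference a bit more concrete, here is a toy reconciliation loop in Python. It is a minimal sketch under my own assumptions, not how Kubernetes is actually implemented. The Cluster class, the container naming and the reconcile function are all hypothetical. The point is that it never just “starts X containers”. Every time it runs, it compares what is running with what is desired and fixes the gap in either direction.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Cluster:
    """Hypothetical in-memory view of a cluster: container id -> app name."""

    running: dict = field(default_factory=dict)
    _ids: count = field(default_factory=lambda: count(1))

    def start(self, app: str) -> str:
        cid = f"{app}-{next(self._ids)}"
        self.running[cid] = app
        return cid

    def stop(self, cid: str) -> None:
        del self.running[cid]


def reconcile(cluster: Cluster, app: str, desired: int) -> list:
    """One pass of 'compare current state to desired state and fix the gap'."""
    current = sorted(cid for cid, a in cluster.running.items() if a == app)
    actions = []
    # Too few running: start the missing ones.
    for _ in range(desired - len(current)):
        actions.append(("start", cluster.start(app)))
    # Too many running (e.g. extras started by hand): stop the surplus.
    for cid in current[desired:]:
        cluster.stop(cid)
        actions.append(("stop", cid))
    return actions


if __name__ == "__main__":
    cluster = Cluster()
    print(reconcile(cluster, "web", 3))  # starts web-1, web-2, web-3
    cluster.stop("web-2")                # a container "dies"
    print(reconcile(cluster, "web", 3))  # starts web-4 to get back to 3
```

In a real cluster, something runs this kind of comparison over and over. That is why dead containers come back and surplus ones disappear.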

So Kubernetes acts a lot like your own personal butler in the datacenter. You tell it what you want your solution to look like from a deployment point of view, and it makes sure that the state of the solution is kept as you want it.

On top of this, Kubernetes supports a ton of features that can help developers keep their solution running smoothly. Things like managing configuration and secrets (credentials etc.), load balancing between containers, service discovery and more. But it is also a pretty complicated piece of software, which is why I will spend a few posts walking through some of the useful features that you probably want to use! Actually, it is not really a single complicated piece of software. It is a collection of pieces of software that together make it all run… And each piece is actually not that complicated. However, together they solve some potentially complicated problems.

The very high level view of the involved parts

At the VERY high level, Kubernetes consists of 2 types of machines. First, you have the master(s), which make up the cluster control plane. This is the part of the system that stores the desired state, so that the cluster can be kept in the correct state. It exposes a REST API that we can use to configure the desired state that we are looking for.

Secondly, you have the worker nodes. These machines do the actual work. “Dumb” workers that run the workload without complaining. They were even called minions in previous versions.

On top of that, you also have a client application called kubectl (pronounced cube control or kube cuttle (or my personal favorite cubby cuddle)). It is a command line tool for configuring Kubernetes. It helps you to send commands to the control plane of your cluster, as well as do some cool things like port-forwarding into the cluster.

Most of the commands that you issue using kubectl are pretty much just converted into the required HTTP calls to the Kubernetes REST API. However, making these calls manually is a bit of a pain due to things like security and well…HTTP…so kubectl is the de facto way to do it.
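As a rough illustration of what “converted into HTTP calls” means, this sketch builds the request for creating a pod but does not send it. The path /api/v1/namespaces/&lt;namespace&gt;/pods is where pods live in the Kubernetes REST API. The server address is an assumption. I have also deliberately left out the authentication and TLS setup that kubectl handles for you.

```python
import json
import urllib.request

# Hypothetical control plane address; yours comes from your kubeconfig.
API_SERVER = "https://127.0.0.1:6443"


def build_create_pod_request(manifest: dict, namespace: str = "default") -> urllib.request.Request:
    """Build (but do not send) a POST that asks the API to store a Pod."""
    # Pods belong to the core "v1" API and are namespaced.
    url = f"{API_SERVER}/api/v1/namespaces/{namespace}/pods"
    body = json.dumps(manifest).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


if __name__ == "__main__":
    pod = {"apiVersion": "v1", "kind": "Pod", "metadata": {"name": "hello-world"}}
    req = build_create_pod_request(pod)
    print(req.get_method(), req.full_url)
```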

The very basic overview of how it actually works

This is actually one of the parts that I wish somebody would have told me very early on. It might not be strictly necessary to know in order to use Kubernetes, but being a developer, I like to have at least a basic understanding of what happens under the hood…

When you tell Kubernetes that you want it to run a container in the cluster for you (actually, you don’t really tell it to run a container, but I will get back to that in a few minutes), this is what actually happens, at a high level…

To start everything off, you make an HTTP call to the control plane. This HTTP POST contains your desired state in the form of a JSON blob (generally generated from a YAML or JSON file, with YAML being by far the most common). This desired state is then put into a back-end store called etcd, which is just a key-value store. That’s it… That’s all that happens…
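Here is a sketch of how little the API does at this step, with a plain Python dict standing in for etcd. The /registry/&lt;resource&gt;/&lt;namespace&gt;/&lt;name&gt; key layout mirrors the default prefix Kubernetes uses in etcd, but treat the whole thing as illustrative. The real API server also validates the object, and it typically stores a binary encoding rather than JSON.

```python
import json

# Stand-in for etcd: key -> serialized desired state.
store: dict = {}


def put_resource(resource: str, manifest: dict) -> str:
    """Store a manifest under a registry-style key. Nothing else happens."""
    namespace = manifest["metadata"].get("namespace", "default")
    name = manifest["metadata"]["name"]
    key = f"/registry/{resource}/{namespace}/{name}"
    store[key] = json.dumps(manifest)  # no containers are started here
    return key


if __name__ == "__main__":
    pod = {"apiVersion": "v1", "kind": "Pod", "metadata": {"name": "hello-world"}}
    print(put_resource("pods", pod))  # /registry/pods/default/hello-world
```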

Then there is another process, or “controller”, that continuously watches the API for changes to the desired state. (For deciding which node a new container should run on, this is the kube-scheduler.) As you just updated the desired state, it figures out what containers (or other resources) are currently missing in the cluster and schedules any needed changes. In the case of having to run a new container, for example, it will look at the available nodes, find one that has enough resources available to run the container, and update the cluster state to record which node should be responsible for running the new workload.
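This is a toy version of the node-picking step. Everything here is an assumption for illustration: the Node class, the plain-number resource fields, and the “first node that fits” strategy. The real kube-scheduler filters out unsuitable nodes and then scores the remaining ones. Note that the sketch only records a decision in the state; it starts nothing.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical node with free CPU (millicores) and memory (MiB)."""

    name: str
    free_cpu_m: int
    free_mem_mib: int


def schedule(pod: dict, nodes: list) -> dict:
    """Return a copy of the pod with nodeName set to the first node that fits."""
    req = pod["requests"]  # hypothetical shape: {"cpu_m": ..., "mem_mib": ...}
    for node in nodes:
        if node.free_cpu_m >= req["cpu_m"] and node.free_mem_mib >= req["mem_mib"]:
            node.free_cpu_m -= req["cpu_m"]
            node.free_mem_mib -= req["mem_mib"]
            # Only the state is updated; running the container is someone else's job.
            return {**pod, "nodeName": node.name}
    return {**pod, "nodeName": None}  # stays pending until some node has room
```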

On the worker nodes, there is another set of processes running. One of these, the kubelet, is responsible for keeping track of what containers should be running on the current node. When the cluster state is updated to include containers assigned to its node, it picks up this change through the API and starts the missing containers. And once the new container(s) are up and running, it keeps monitoring them to make sure that the desired set of containers is always running on the node.
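On the node side, the core of the idea is just a set difference. This is a hypothetical helper, not the kubelet’s real logic. Pods are dicts with a name and a nodeName; the real Pod field that records the scheduler’s choice is spec.nodeName. The running parameter is the set of pods this node currently runs.

```python
def sync_node(node_name: str, pods: list, running: set) -> tuple:
    """Work out what this node must start and stop to match the cluster state."""
    assigned = {p["name"] for p in pods if p.get("nodeName") == node_name}
    to_start = assigned - running  # assigned here, but not running yet
    to_stop = running - assigned   # running here, but no longer wanted
    return to_start, to_stop


if __name__ == "__main__":
    pods = [{"name": "hello-world", "nodeName": "node-b"}]
    print(sync_node("node-b", pods, set()))  # ({'hello-world'}, set())
```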

That’s it! You send your desired state to the API. The API puts the configuration in etcd. A controller notices the change, and updates the config to include information about what node should run the workload. A controller on the selected node notices the change and starts up the container. And Bob’s your uncle!

The control plane and worker nodes then continuously monitor the desired state and the cluster to see if anything changes. If something does, they compare the current state with the desired state and make whatever changes are required to keep the cluster running in the state that it should be in.

So this might all seem pretty obvious when you read it, but what I was missing was the fact that the API only puts state documents in a key-value store. The API actually doesn’t start any containers, or do anything else. It is only responsible for adding, updating and deleting the desired state in the store. Other parts of the system then watch that state (through the API) to figure out what needs to be done. In some cases that means scheduling containers to be run. In other cases it might be something completely different, like setting up network routing.

However, not all resources that you add to the cluster end up being a “thing” running in the cluster! Take, for example, the resource type called service (I will talk more about them later, don’t worry). A service is a standardized way to find containers in a cluster. Conceptually, it is an entity with a fixed IP address that you can make calls against to reach any of a set of containers. In my mind, creating one of these would cause Kubernetes to spin up some process that proxied requests to those containers. However, a service is not really a “thing” in that way. When we add a service configuration to our cluster, it just ends up as a document in etcd, just like everything else, and it does not cause Kubernetes to schedule the creation of a process the way containers do. Instead, the configuration is used by a process called kube-proxy that runs on each node. The kube-proxy can handle the service configuration in a couple of ways, but the most common one is to set up iptables rules on the node that redirect calls to the service’s IP address to one of the correct containers. It continuously monitors the cluster state for changes and keeps the iptables rules up to date.
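The “redirect to the correct container” part hides a neat trick. In its iptables mode, kube-proxy writes one rule per endpoint, and each rule matches randomly. The probability for each rule is chosen so that the traffic ends up evenly split. This sketch models that idea with exact fractions. It does not generate real iptables rules, and the service and pod IP addresses are made up.

```python
from fractions import Fraction


def rule_probabilities(n: int) -> list:
    """Match probability for rule i of n, tried in order."""
    # Rule i is only reached if rules 0..i-1 did not match, so matching with
    # probability 1/(n - i) gives every endpoint the same overall share.
    return [Fraction(1, n - i) for i in range(n)]


def effective_shares(probs: list) -> list:
    """Fraction of all calls that end up at each rule's endpoint."""
    shares, remaining = [], Fraction(1)
    for p in probs:
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares


# Hypothetical service and pod addresses.
service = {"clusterIP": "10.96.0.50", "port": 80}
endpoints = ["10.1.0.11:80", "10.1.0.12:80", "10.1.0.13:80"]

if __name__ == "__main__":
    for ep, p in zip(endpoints, rule_probabilities(len(endpoints))):
        print(f"{service['clusterIP']}:{service['port']} -> {ep} with p={p}")
```

With three endpoints, the rules match with probability 1/3, 1/2 and 1. Each endpoint ends up with a 1/3 share of the calls.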

As I said, there will be more about Kubernetes services later, but I wanted to just have an example to show that adding a resource in Kubernetes doesn’t necessarily mean that some form of process is spun up in the cluster. This is a VERY important thing to understand. Without a piece of software that listens for changes in the state configuration, Kubernetes would pretty much just be a REST API to enable CRUD operations to be performed on a key-value store.

It is also important to understand the decoupled nature of Kubernetes. The API just adds things to a store. A separate piece of software acts on this change, potentially making changes to the state, which causes additional processes to pick up these changes and act on them. This creates a nicely decoupled and very powerful system. Decoupling things in this way is common in Kubernetes, as you will probably see. It favors watching state over owning entities.
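To make the “watching state” idea concrete, here is a tiny watchable store. It is a sketch, not the real API server or etcd, and the Store class is hypothetical. The ADDED, MODIFIED and DELETED event names are borrowed from the Kubernetes watch API. Without a watcher, put and delete are just CRUD. The behavior only appears once something subscribes.

```python
from typing import Callable, Optional

# A watcher receives (event type, key, new value or None on delete).
Watcher = Callable[[str, str, Optional[dict]], None]


class Store:
    """Hypothetical watchable key-value store standing in for API server + etcd."""

    def __init__(self) -> None:
        self._data: dict = {}
        self._watchers: list = []

    def watch(self, fn: Watcher) -> None:
        self._watchers.append(fn)

    def put(self, key: str, value: dict) -> None:
        event = "MODIFIED" if key in self._data else "ADDED"
        self._data[key] = value
        self._notify(event, key, value)

    def delete(self, key: str) -> None:
        if key in self._data:
            del self._data[key]
            self._notify("DELETED", key, None)

    def _notify(self, event: str, key: str, value: Optional[dict]) -> None:
        # The store never acts on a change itself; it only tells whoever listens.
        for fn in self._watchers:
            fn(event, key, value)


if __name__ == "__main__":
    log = []
    store = Store()
    store.put("pods/hello-world", {"image": "zerokoll/helloworld"})  # nobody listening
    store.watch(lambda event, key, value: log.append((event, key)))
    store.put("pods/hello-world", {"image": "zerokoll/helloworld"})
    store.delete("pods/hello-world")
    print(log)  # [('MODIFIED', 'pods/hello-world'), ('DELETED', 'pods/hello-world')]
```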

Setting up Kubernetes on your machine

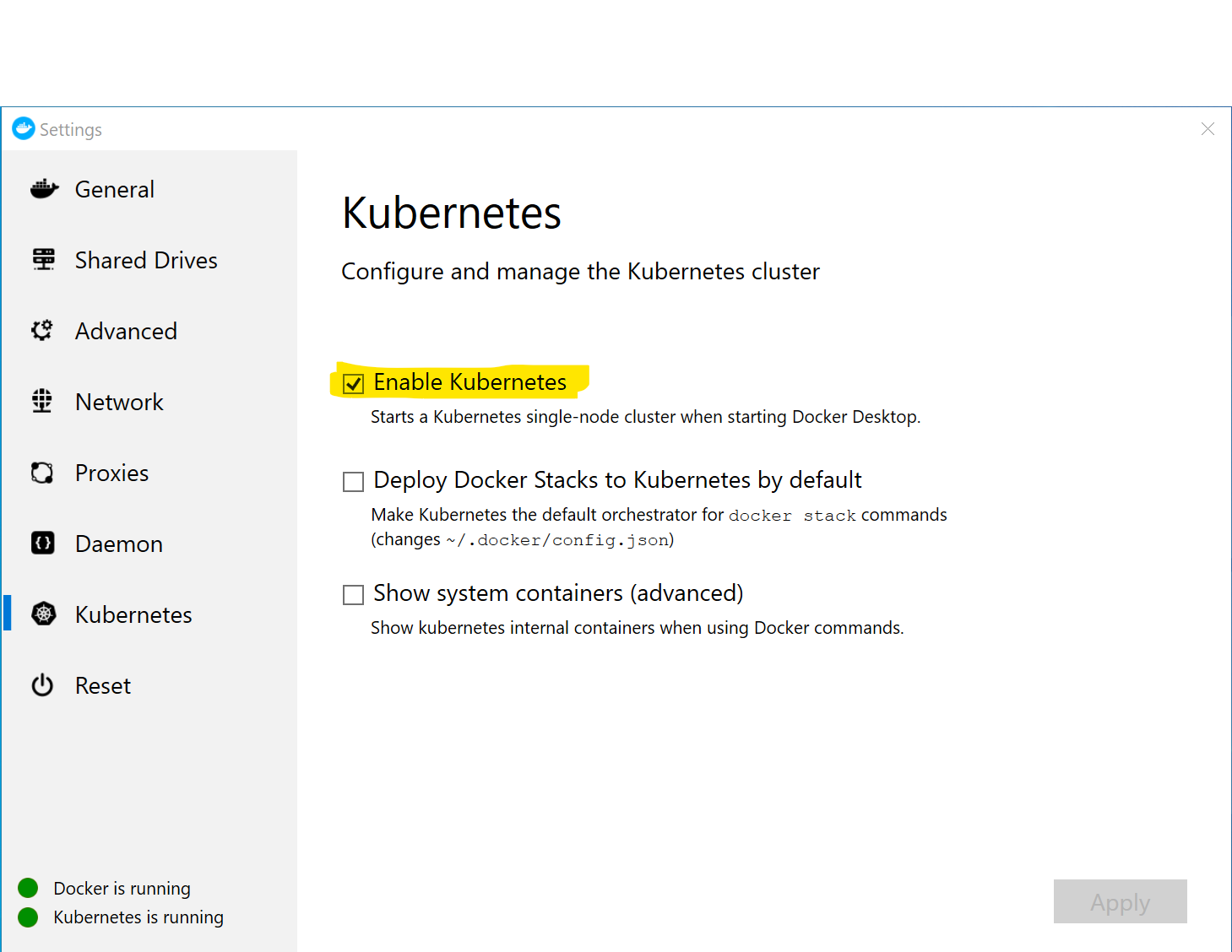

The easiest way to get started with Kubernetes is actually to just use the built-in capabilities in Docker Desktop. Once you have installed Docker Desktop, you can just open the settings, go to “Kubernetes”, tick the “Enable Kubernetes” checkbox and click “Apply”.

This will download Kubernetes and set it up inside the Docker virtual machine that you are running as part of Docker Desktop. That’s it! You are now ready to get started with Kubernetes on your dev box.

The really meta part about this, is that Kubernetes runs as containers inside the same Docker environment that it is controlling… Oh… Ehh… Cool!

Note: It’s worth mentioning that the Kubernetes installation that you get on your local box is just a single machine (for somewhat obvious reasons). This means that all the different components involved in running a Kubernetes cluster are running on the same machine. So there is no separate master/control plane and worker node(s). Which is fine for a dev scenario in most cases… But it is definitely not recommended for a production scenario!

The first deployment

Let’s go ahead and deploy a container to our Kubernetes cluster. However, before we can do that, we need to understand one thing that I hinted at before. You do not tell Kubernetes to run containers. Instead, you create something called a Pod.

The Pod is the smallest deployable unit in Kubernetes. It is a unit that contains one or more containers, and that is scaled together as a single entity.

So, what does this mean, and why are we not able to deploy just a single container? Well, often there is a need to deploy, and scale, multiple containers as a unit, which is what the concept of a pod enables. And as it is perfectly valid to have a pod with a single container, having the pod as the smallest unit doesn’t really hinder you in any way, while still allowing you to have more complex functionality.

Why would you deploy and scale more than one container at a time? Well, sometimes you have containers that work in tandem. An example is a log aggregating container that comes coupled with the container that generates the logs. They are deployed together, and also share the same “environment”. However, make sure that you think about the scaling part as well! It might seem like a smart idea to, for example, combine your web application and the database it uses into a single pod. But when you start scaling out, running multiple copies of that pod, you end up with multiple databases as well. So combining them into a single pod would probably not be a good idea.

Containers in a pod share one IP address, so they have to use separate ports if they expose any. They can also share storage, and communicate with each other using inter-process communication or simply over localhost. So you can think of a pod as a container with multiple processes running inside it.
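Since the containers share one IP address, two of them cannot both listen on port 80. This hypothetical helper checks a pod definition for duplicate container ports. The pod is written as a Python dict in the same shape as a Kubernetes pod YAML file. The log-shipper sidecar and its image name are made up for the example.

```python
from collections import Counter

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-logger"},
    "spec": {
        "containers": [
            {"name": "web", "image": "zerokoll/helloworld",
             "ports": [{"containerPort": 80}]},
            # Hypothetical sidecar image, for illustration only.
            {"name": "log-shipper", "image": "example/log-shipper",
             "ports": [{"containerPort": 9000}]},
        ]
    },
}


def conflicting_ports(pod: dict) -> set:
    """Ports declared by more than one container (same protocol) in the pod."""
    ports = Counter(
        (p.get("protocol", "TCP"), p["containerPort"])
        for c in pod["spec"]["containers"]
        for p in c.get("ports", [])
    )
    return {port for (_proto, port), n in ports.items() if n > 1}


if __name__ == "__main__":
    print(conflicting_ports(pod))  # set()
```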

Comment: Combine containers in pods when they need to work together, and scale together. If they have different scaling requirements, or are not really tightly coupled, keep them as separate pods. There is nothing wrong with running a pod with a single container!

Ok, with that out of the way, let’s create a pod and run it in the Kubernetes cluster!

The first thing we need to do is create a config file that defines our desired state. In this case, this means creating a config file that defines a pod containing a single container based on the image of our choice. So how do we do this? Well, the easiest way is to create a file called something like helloworld-pod.yml, and add the following:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: hello-world
spec:
  containers:
    - name: helloworld
      image: zerokoll/helloworld
```

This configuration file says the following:

- We want to use version 1 of the Kubernetes API

- We want to create a Pod

- The pod should be named “hello-world”

- The pod should contain a single container called helloworld, based on the image zerokoll/helloworld

Note: The zerokoll/helloworld is pointing towards a VERY basic image in my Docker Hub registry. It is a simple ASP.NET Core application that says “Hello World” when you browse to it… Yay! That is REALLY useful bro! ;)

Next, we need to add this config to our Kubernetes cluster. To do this, execute the following:

```
kubectl create -f ./helloworld-pod.yml
```

The response from this call is just a simple pod/hello-world created message, telling you that the resource has been created.

If you want to verify that it has indeed been created, you can run

```
kubectl get pods
```

This should return a list of the pods running in the default namespace of your cluster, like this:

```
NAME          READY   STATUS              RESTARTS   AGE
hello-world   0/1     ContainerCreating   0          4s
```

As you can see, it is currently creating my container, which could take a little while if the image has not been pulled to your cluster yet. Once it is done, you should see something like this:

```
NAME          READY   STATUS    RESTARTS   AGE
hello-world   1/1     Running   0          43s
```
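If you ever want to script this check, something like the following works for the simple output above. It is a quick sketch that assumes no column value contains spaces. Newer kubectl versions can print extra details in the RESTARTS column, which would break that assumption. For anything serious, kubectl get pods -o json gives you structured output instead.

```python
def parse_pods(table: str) -> list:
    """Turn `kubectl get pods` text output into a list of dicts keyed by header."""
    lines = [ln for ln in table.strip().splitlines() if ln.strip()]
    header = lines[0].split()
    return [dict(zip(header, line.split())) for line in lines[1:]]


def is_ready(row: dict) -> bool:
    """Running, with every container in the pod ready (e.g. READY 1/1)."""
    ready, total = map(int, row["READY"].split("/"))
    return row["STATUS"] == "Running" and ready == total


sample = """
NAME          READY   STATUS    RESTARTS   AGE
hello-world   1/1     Running   0          43s
"""

if __name__ == "__main__":
    print([is_ready(r) for r in parse_pods(sample)])  # [True]
```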

Next, we need to try to communicate with our pod to see the fantastic greeting. However, the problem now is that the pod/container is running inside Kubernetes, which has its own, separate network.

Well…actually, it is not really running inside Kubernetes. It’s running inside your Docker environment. Kubernetes has just asked Docker to run the container for us. You can even go ahead and run

```
C:\> docker ps
CONTAINER ID   IMAGE                 COMMAND                  CREATED         STATUS         PORTS   NAMES
5c85447abd13   zerokoll/helloworld   "dotnet HelloWorld.d…"   3 minutes ago   Up 3 minutes           k8s_helloworld_hello-world_default_4ed93c97-1287-11ea-a46c-00155d0aa703_0
```

to see your container running in Docker. Kubernetes is just the butler…

Anyhow…to be able to talk to the container, we need a way to forward a port from our local machine to the container that was just created. Luckily, kubectl has this functionality built in. So all you need to do is call

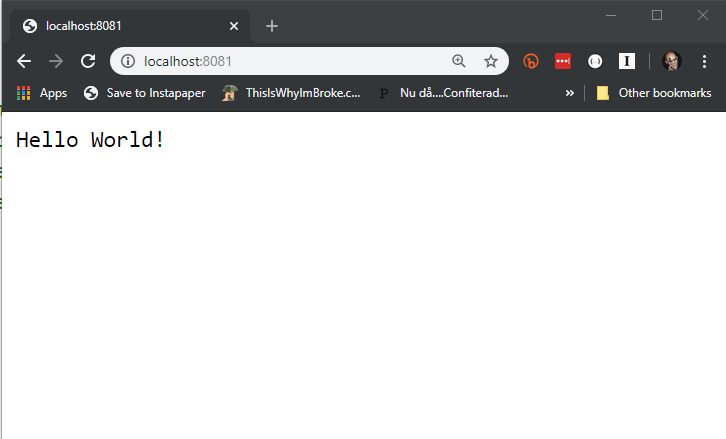

```
kubectl port-forward hello-world 8081:80
```

This will print “Forwarding from 127.0.0.1:8081 -> 80”, which tells us that the port forwarding is working. This means that any calls to localhost:8081 will be forwarded to port 80 on the container inside Kubernetes, as long as the kubectl command is running.

To see the awesomeness that is the zerokoll/helloworld image in action, just open a browser and browse to localhost:8081, and you should see a “Hello World” greeting pop up in the browser.
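If you would rather check it from code than from a browser, a few lines of Python will do. This sketch assumes the kubectl port-forward command is still running in another terminal. It also assumes the page contains the same “Hello World” text.

```python
import urllib.request


def fetch(url: str = "http://localhost:8081/", timeout: float = 5.0) -> str:
    """GET the forwarded port and return the response body as text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

With the port-forward running, calling fetch() should return a page containing “Hello World”. With it stopped, you get a connection error instead.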

There you have it! Your first pod running in Kubernetes!

Note: This will lock the kubectl command in the terminal, and as long as the command is running, it will forward stuff… As soon as it dies, the port forwarding stops.

The last thing to do is to clean up the stuff that we have just done… Stop the port-forwarding by pressing Ctrl+C. Then we can delete the pod by either calling

```
kubectl delete -f ./helloworld-pod.yml
```

Or by calling

```
kubectl delete pod/hello-world
```

The latter is OK when you are dealing with a single pod like this, but otherwise the file-based approach is often easier, as it deletes all the resources defined in the file at once. Basically, it undoes everything you created when calling kubectl create -f.

Ok, now K8s has forgotten all about the pod that we were running, and you are back to a clean slate! Fairly easy, right?

Note: I should probably mention that Kubernetes is very often shortened to K8s, which comes from “K, then 8 letters, and then s”. It might be obvious, but I thought I would at least mention it…
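And since it is just a counting rule, here it is as a throwaway Python function. Shortenings like this are usually called numeronyms. The same rule gives you i18n for internationalization.

```python
def numeronym(word: str) -> str:
    """First letter + count of the letters in between + last letter."""
    if len(word) <= 3:
        return word  # nothing worth shortening
    return f"{word[0]}{len(word) - 2}{word[-1]}"


if __name__ == "__main__":
    print(numeronym("Kubernetes"))  # K8s
```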

That’s it! Part one of my introduction to Kubernetes! I hope you enjoyed it!

The second part of this series is available here.