Spinning up Azure Container Instances using the Docker CLI

The Docker CLI has recently added support for running Docker containers using cloud providers, more specifically Azure Container Instances (ACI) and Amazon ECS. This seems like a really cool addition to the CLI, so obviously it has to be tried out and blogged about!

Setting up a context

The first thing you need to do is to set up something called a context. A context is basically a predefined configuration that defines what service provider you want to use, and the configuration needed for that provider. But before we can do that, we need to log in to our cloud provider, in my case Azure. This is done by executing the following command

docker login azure

This will open a web browser where we can add our credentials and sign in.

Note: If your account is associated with more than one tenant, you can specify the --tenant-id parameter to define which of the tenants you want to use

If you want to use a service principal instead of logging in with your own account, you can use the following command instead

docker login azure --client-id <CLIENT ID> --client-secret <CLIENT SECRET> --tenant-id <TENANT ID>

This retrieves a token that the Docker CLI can use to access Azure. However, it is only valid for a short period of time. An hour-ish I think. And it isn’t auto refreshed, so you need to re-authenticate every so often if you spend more than that working with this.

Once you have an access token, you need to create the context that I mentioned before. In the case of Azure, you do that by executing a command that looks something like this

docker context create aci <CONTEXT NAME>

This starts an interactive flow that sets up a context with the defined name, and lets you decide what subscription and resource group the context should be using.

Note: If you decide to create a new resource group instead of using an existing one, a resource group will be created for you automatically. However, the name will just be an auto generated GUID, which I personally don’t find that awesome to be honest…

If you don’t like the interactive CLI version, you can pass in the required configuration using command line parameters like this instead

docker context create aci <CONTEXT NAME> --subscription-id <SUBSCRIPTION ID> --resource-group <RG NAME> --location <LOCATION>

In my case, I want to have a new resource group, but I don’t want a GUID for a name. So I’ll use the Azure CLI to create a new resource group called aci-demo, and then use that group in a new ACI context called demo-context. Like this

az group create -n aci-demo -l westeurope

docker context create aci demo-context --subscription-id XXYYZZ --resource-group aci-demo --location westeurope
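If you end up doing this more than once, the two commands above can be wrapped in a small script. This is just a sketch of how I would do it, not anything official. The run and create_aci_context helpers are names I made up, and DRY_RUN is a made-up switch that prints the commands instead of executing them.

```shell
# Sketch: create a named resource group and an ACI context in one go.
# run and create_aci_context are hypothetical helpers, not part of any CLI.

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Arguments: <context name> <resource group> <location> <subscription id>
create_aci_context() {
  local context="$1" group="$2" location="$3" subscription="$4"
  run az group create -n "$group" -l "$location"
  run docker context create aci "$context" \
    --subscription-id "$subscription" \
    --resource-group "$group" \
    --location "$location"
}

# Example (prints the two commands without running them):
#   DRY_RUN=1 create_aci_context demo-context aci-demo westeurope XXYYZZ
```

Running it with DRY_RUN=1 first is a cheap way to check that the names and the location are what you expect before anything gets created in Azure.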

Once I have my context up and running, I can have a look at it using the Docker client, by running

> docker context ls

NAME           TYPE   DESCRIPTION                               DOCKER ENDPOINT                  KUBERNETES ENDPOINT                                 ORCHESTRATOR
default *      moby   Current DOCKER_HOST based configuration   npipe:////./pipe/docker_engine   https://kubernetes.docker.internal:6443 (default)   swarm
demo-context   aci    aci-demo@westeurope

As you can see, I now have a default context that is set up to use my local WSL-based Docker service, as well as one called demo-context, that is set up to use ACI.

I can also use docker context inspect demo-context to get a deeper look into the configuration for the context

> docker context inspect demo-context

[
    {
        "Name": "demo-context",
        "Metadata": {
            "Description": "aci-demo@westeurope",
            "Type": "aci"
        },
        "Endpoints": {
            "aci": {
                "Location": "westeurope",
                "ResourceGroup": "aci-demo",
                "SubscriptionID": "XXYYZZ"
            },
            "docker": {
                "SkipTLSVerify": false
            }
        },
        "TLSMaterial": {},
        "Storage": {
            "MetadataPath": "C:\\Users\\Chris\\.docker\\contexts\\meta\\db7f62b11fbda8ad2759dff691ff9ab0ea11bc248aab479f51f1cdd8f517c6f0",
            "TLSPath": "C:\\Users\\Chris\\.docker\\contexts\\tls\\db7f62b11fbda8ad2759dff691ff9ab0ea11bc248aab479f51f1cdd8f517c6f0"
        }
    }
]

Now that I have a context set up, I can go ahead and run a container in Azure using just the Docker CLI.

Running a container in Azure Container Instances

Let’s go ahead and try to run a simple nginx demo container in ACI

> docker run --context demo-context -p 80:80 nginxdemos/hello

[+] Running 2/2

- Group interesting-chaum Created 5.0s

- interesting-chaum Done 20.6s

This starts up a new nginx demo container. The container gets an auto generated name as usual, since I didn’t provide a custom name. In this case, it ended up being called interesting-chaum.

However, it doesn’t set up a DNS entry for it. So to access it, we need to get hold of the container’s public IP address. This can be done in a couple of different ways. First of all, you can obviously go to the portal and look at the container instance. But now that we have the terminal up and running already, we might as well use it. And you have at least 2 options for getting the IP through the terminal. The first one is to run the following Azure CLI command

> az container show --resource-group aci-demo --name interesting-chaum --query 'ipAddress.ip'

"52.143.9.242"

and the second one is to use the Docker CLI like this

> docker --context demo-context ps

CONTAINER ID        IMAGE              COMMAND   STATUS    PORTS
interesting-chaum   nginxdemos/hello             Running   52.143.9.242:80->80/tcp
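If you want the IP address on its own, for example to use it in a script, you can pick it out of the PORTS column. Here is a minimal sketch, assuming the output looks like the listing above, with the container name in the first column and the ports in the last. The extract_ip helper is a name I made up.

```shell
# Sketch: pull the public IP out of the PORTS column of `docker ps` output.
# extract_ip is a hypothetical helper. It reads `docker ps` text on stdin
# and takes the container name as its only argument.
extract_ip() {
  # Match the row whose first column is the container name, then keep
  # everything before the first ":" in the last column (the PORTS value).
  awk -v name="$1" '$1 == name { split($NF, parts, ":"); print parts[1] }'
}

# Usage:
#   docker --context demo-context ps | extract_ip interesting-chaum
```

For the Azure CLI version, adding --output tsv to the az container show command gives you the address without the surrounding quotes.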

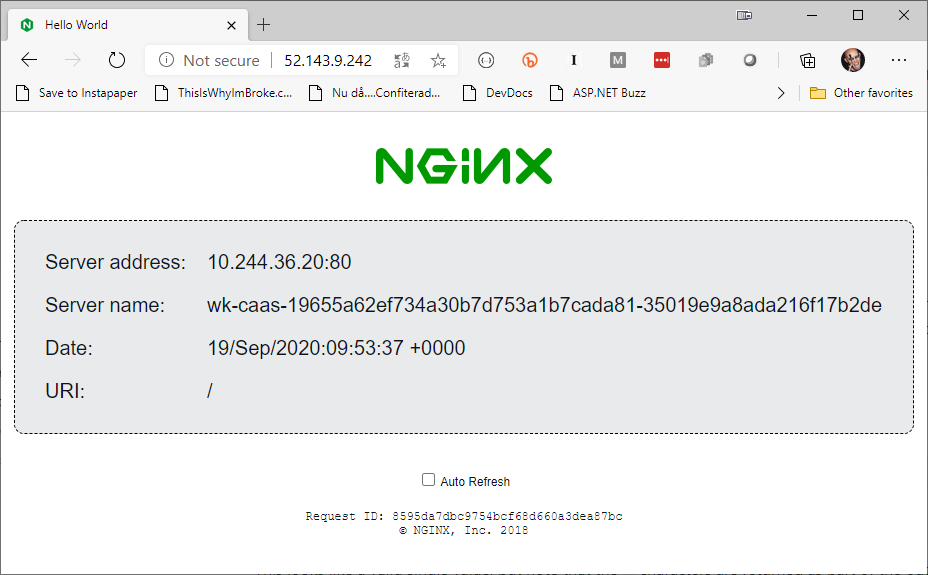

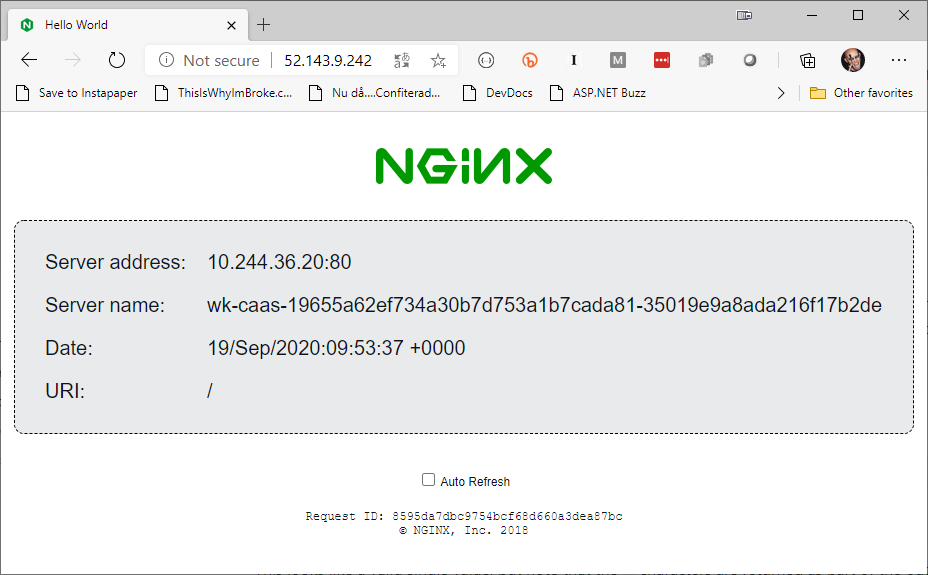

Either way, once we have the IP address, we can jump over to our browser of choice and browse to the IP address.

There it is! A simple nginx container running in ACI using just the Docker CLI.

Well…“just” the Docker CLI… I did use the Azure CLI to get the resource group up and running, but the actual container stuff was pure Docker CLI!

And as this is just like any other container running in Docker, you can do most of the things you would normally do with a Docker container. For example, have a look at its logs by running

> docker --context demo-context logs interesting-chaum

10.240.255.56 - - [19/Sep/2020:09:53:37 +0000] "GET / HTTP/1.1" 200 7284 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/85.0.4183.102 Safari/537.36 Edg/85.0.564.51" "-"

10.240.255.56 - - [19/Sep/2020:10:01:12 +0000] "GET / HTTP/1.1" 200 7284 "-" "HTTP Banner Detection (https://security.ipip.net)" "-"

or even exec into it

> docker --context demo-context exec -it interesting-chaum sh

> ls

bin etc lib mnt root sbin sys usr

dev home media proc run srv tmp var

> exit

Just as if it was running on my local machine.

Note: If you are working with a specific context for a while and not just a single command or two, you can set the context as the default by running docker context use <CONTEXT NAME>. That way you don’t need to add the --context flag all the time. Just remember to change it back when you are done.
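Since forgetting to switch back is an easy mistake to make, one option is to let a small shell function do the switching for you. This is only a sketch. The with_context helper is a name I made up, and it assumes that the context you want to return to is the one called default.

```shell
# Sketch: run one command with a given context selected, then switch back.
# with_context is a hypothetical helper. It assumes "default" is the
# context you want to return to afterwards.
with_context() {
  local ctx="$1" rc=0
  shift
  docker context use "$ctx" >/dev/null || return
  # Run the wrapped command and remember its exit code.
  "$@" || rc=$?
  # Always switch back, even if the command failed.
  docker context use default >/dev/null
  return "$rc"
}

# Usage:
#   with_context demo-context docker ps
```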

And when I’m done with my container, I can just remove it by running

> docker --context demo-context stop interesting-chaum

> docker --context demo-context rm interesting-chaum

or just

docker --context demo-context rm -f interesting-chaum

Azure file shares as Docker volumes

Another cool feature is that you can use an Azure file share as a volume in Docker. And they are added to the container using the -v parameter like any other volume. Like this

docker run --context demo-context -v <STORAGE ACCOUNT NAME>/<FILESHARE NAME>:/<TARGET PATH> nginxdemos/hello

To demo this feature, I’m going to add a simple HTML page to an Azure file share, and then map that file share as a volume in an ACI container running nginx, making nginx serve that page on the web.

The first thing I need is a storage account and a file share. I could create these using the Azure CLI as usual, but for this demo I’m actually going to use the Docker CLI to create them as well. Mostly because it is actually easier than using the Azure CLI. All you need to do is run a command that looks like this

docker --context <CONTEXT NAME> volume create --storage-account <ACCOUNT NAME> --fileshare <FILE SHARE NAME>

For this demo, that means

docker --context demo-context volume create --storage-account acidemostorage --fileshare myfileshare

This will create a new storage account inside the resource group defined by the Docker context, and then add a file share inside that storage account. Just what I need.

Next, I need to add the HTML page, which looks like this

<!DOCTYPE html>
<html>
<head>
    <meta charset="UTF-8">
    <title>Docker ACI Demo</title>
</head>
<body>
    <h1>Docker ACI Demo</h1>
</body>
</html>

Adding the file to the file share can be done in multiple ways. Normally when using Docker, I would spin up a temporary container with the volume mapped, and then use docker cp to copy the file. Unfortunately, docker cp doesn’t seem to work when working with ACI containers. So because of this, I’ll just have to upload the files to the file share using the Azure CLI instead. Luckily, that is a pretty simple task.

The command for it looks like this

az storage file upload --account-key <STORAGE ACCOUNT KEY> --account-name <ACCOUNT NAME> --share-name <FILE SHARE NAME> --source <PATH TO FILE>

So I’ll go ahead and run

az storage file upload --account-key <SECRET> --account-name acidemostorage --share-name myfileshare --source ./index.html
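If you don’t have the storage account key at hand, you can look it up with the Azure CLI as well, using az storage account keys list. Below is a small sketch that fetches the first key and passes it on to the upload command. The upload_to_share helper is a name I made up, and it assumes that the storage account lives in the resource group you pass in.

```shell
# Sketch: look up the first access key of a storage account and use it
# to upload a file to a file share.
# upload_to_share is a hypothetical helper, not part of the Azure CLI.
# Arguments: <resource group> <storage account> <file share> <path to file>
upload_to_share() {
  local group="$1" account="$2" share="$3" file="$4" key
  # --query '[0].value' picks the value of the first key in the list,
  # and --output tsv prints it without quotes.
  key="$(az storage account keys list \
    --resource-group "$group" \
    --account-name "$account" \
    --query '[0].value' --output tsv)"
  az storage file upload \
    --account-key "$key" \
    --account-name "$account" \
    --share-name "$share" \
    --source "$file"
}

# Usage:
#   upload_to_share aci-demo acidemostorage myfileshare ./index.html
```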

Now that the HTML file has been uploaded to the file share, I can start my nginx server container and map my volume inside it like this

docker run --context demo-context -d -p 80:80 -v acidemostorage/myfileshare:/usr/share/nginx/html nginx

Note: By default, the nginx image will serve any file that is located in the /usr/share/nginx/html directory. And it will use any file named index.html as the default file that is to be served at /. So by mapping my volume to that path, with the index.html file in the root of the file share, it should be served automatically.

Once the container is up and running, I can get hold of the IP address using docker --context demo-context ps, and browse to the IP address using my favorite browser

And there it is! My simple HTML page is served using nginx inside a Docker container running in Azure Container Instances, with the page coming from a file share using Docker volume mapping. That’s pretty cool!

Once I’m done looking at this marvel of technology, I can simply remove the container by running docker --context demo-context rm -f <CONTAINER NAME>.

Docker Compose

You can also run Docker Compose-based solutions using ACI, just like you would on your local machine. Just set the context, or use the --context flag, to define what context you want to run your containers in, and you are good to go.

To run the HTML demo above using a Docker Compose YAML file instead of just Docker commands, I can create a docker-compose.yml file that looks like this

version: '3'
services:
  web:
    image: nginx
    ports:
      - "80:80"
    volumes:
      - myfileshare:/usr/share/nginx/html
volumes:
  myfileshare:
    driver: azure_file
    driver_opts:
      share_name: myfileshare
      storage_account_name: acidemostorage

Once I have my docker-compose.yml file, I can start my containers by executing

docker --context <CONTEXT> compose up

Once the docker compose command has finished, we can once again get hold of the IP address and browse to the webpage.

However, since the webpage looks exactly the same as in the previous demo, there is no real reason to show a screenshot of it…

And as soon as you are done playing with your compose-based containers, you can just remove them by running

docker --context <CONTEXT> compose down

There you have it! Running Docker containers in ACI using the Docker CLI is a piece of cake, and it can be really useful. So, why not go and have a play with it and see if you can break it!