My intro to Docker - Part 5 of 5

In the previous posts, I have talked about everything from what Docker is to how you can set up a stack of containers using docker-compose, but all of the posts have been about setting up containers on a single machine. That can be really useful, but imagine being able to deploy your containers just as easily to a cluster of machines. That would be awesome!

With Docker Swarm, this is exactly what you can do. You can set up a cluster of Docker hosts, and deploy your containers to them in much the same way that you would deploy to a single machine. How cool is that!

Creating a cluster, or swarm

To be able to try out what it’s like working with a cluster of machines, we need a cluster of machines. By default, Docker for Windows/Mac includes only a single Docker host when it’s installed. However, it also comes with a tool called docker-machine that can be used to create more hosts very easily.

There is just one thing we need to do before we can create our cluster of hosts. We need to set up a virtual network switch for them to attach to. And to do that, I’m going to use the Hyper-V manager.

Remember that our hosts run in Hyper-V on Windows, so we are dependent on that infrastructure for it to work. On a Mac, the situation is different. So if you are doing this on a Mac, I suggest Googling how to create a virtual switch on a Mac.

In the Hyper-V Manager, right-click the local machine in the menu to the left

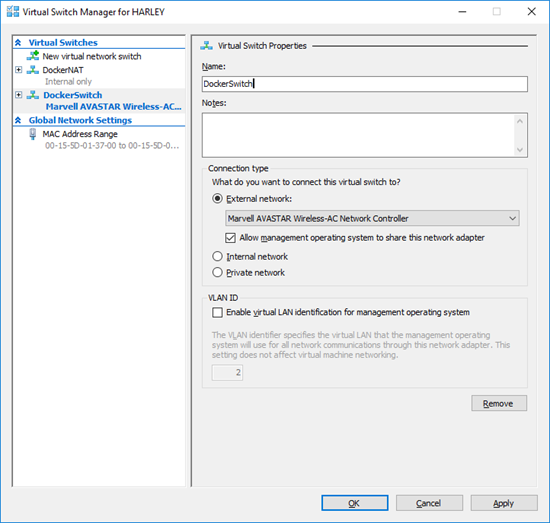

and then click Virtual Switch Manager…, followed by Create Virtual Switch. Name the switch something useful, and connect it to an external network of your choice. You might have more than one network adapter on your machine. In that case, choose the one that you want the Docker hosts to be connected to.

Personally, I named it DockerSwitch and attached it to the wireless adapter on my laptop.

Note: One thing to note about this is that attaching the hosts to a public network adapter like this will give the hosts new IP addresses every time you switch networks. This will in turn screw up your cluster. But it works well as long as you are just quickly trying this out.

With that switch in place, we can go ahead and create a couple of Docker host machines using the docker-machine tool, a process that is almost too easy to believe. All you have to do is call docker-machine, passing it the virtualization platform that is being used, the name of the virtual switch to use, and the name to use for the machine. So I’ll go ahead and execute the following

docker-machine create -d hyperv --hyperv-virtual-switch DockerSwitch SwarmManager

using a PowerShell window running as an Administrator. This will set up a new Hyper-V based virtual machine using a Boot2Docker ISO, naming it SwarmManager and attaching it to the DockerSwitch virtual switch.

This machine will be the manager of my cluster. That means that it will be the machine that I will execute my Docker commands against. It then makes sure to set up the state in the swarm for us. In the case of starting some new containers, it means that it will either host the container itself, or delegate to a worker in the cluster, depending on load and requirements and so on.

You don’t really have to set up any workers to be able to run the swarm commands that we are going to be running in this post. A one-machine cluster is perfectly fine. But it is a little boring. So I’ll go ahead and create one more machine that will act as a worker, by running the following command

docker-machine create -d hyperv --hyperv-virtual-switch DockerSwitch SwarmWorker01

This will create a second VM called SwarmWorker01, which I’ll set up to be a worker in the swarm.
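If you want more than one worker, the same command can be wrapped in a small loop. The following is only a sketch, assuming a POSIX-style shell (for example Git Bash) rather than PowerShell. The run and create_hosts helpers and the DRY_RUN variable are hypothetical names introduced for this example, and the worker names simply continue the SwarmWorker01 pattern.

```shell
# Run a command, or just print it when DRY_RUN=1 (hypothetical helper).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Create SwarmManager plus the requested number of workers on one switch.
create_hosts() {
  switch="$1"
  worker_count="$2"

  run docker-machine create -d hyperv --hyperv-virtual-switch "$switch" SwarmManager

  i=1
  while [ "$i" -le "$worker_count" ]; do
    # Workers are named SwarmWorker01, SwarmWorker02, ...
    name=$(printf 'SwarmWorker%02d' "$i")
    run docker-machine create -d hyperv --hyperv-virtual-switch "$switch" "$name"
    i=$((i + 1))
  done
}
```

Setting DRY_RUN=1 and calling create_hosts DockerSwitch 2 prints the three docker-machine commands without creating anything, which is a cheap way to check the names before the real run.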

Next, I need to create the actual swarm. And to do that, I need to run a command that looks like this

docker swarm init

on the manager node. However, it needs to run on the actual host. So to do that, I’ll use docker-machine to SSH into the machine and execute the command. Like this

docker-machine ssh SwarmManager "docker swarm init"

This tells docker-machine to SSH into SwarmManager, and execute docker swarm init. This will return some information about how to join the swarm from a worker. It should look similar to this

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-0poaqqcljbote3vg8zpl7g68ehrcr3xsqpbth1ckqxnqip6vyd-5vsl5hjgmplhk98lnc9n7og70 192.168.43.16:2377

To add a manager to this swarm, run docker swarm join-token manager and follow the instructions.

which is pretty descriptive. So let’s go ahead and use docker-machine to execute that on the worker node by running

docker-machine ssh SwarmWorker01 "docker swarm join --token SWMTKN-1-0poaqqcljbote3vg8zpl7g68ehrcr3xsqpbth1ckqxnqip6vyd-5vsl5hjgmplhk98lnc9n7og70 192.168.43.16:2377"

This should return a simple statement telling you that the node has joined the swarm as a worker.

These simple commands have now given us a tiny swarm, containing a manager and a single worker node, and it’s now ready for us to go ahead and deploy our containers.
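Copying the token by hand works fine, but it can also be fetched on demand, since docker swarm join-token -q worker prints only the token. The sketch below assumes a POSIX-style shell, and it assumes that the address reported by docker-machine ip is the one the manager advertises to the swarm, which should hold for the single-adapter machines created above. build_join_command and join_worker are hypothetical helper names.

```shell
# Compose the join command from a token and the manager's address.
# 2377 is the port shown in the "docker swarm init" output.
build_join_command() {
  token="$1"
  manager_ip="$2"
  echo "docker swarm join --token $token $manager_ip:2377"
}

# Ask the manager for its worker token and IP, then join the given machine.
join_worker() {
  worker="$1"
  token=$(docker-machine ssh SwarmManager "docker swarm join-token -q worker")
  manager_ip=$(docker-machine ip SwarmManager)
  docker-machine ssh "$worker" "$(build_join_command "$token" "$manager_ip")"
}
```

With this in place, join_worker SwarmWorker01 should do the same thing as the manual command, without the token ever being pasted into the terminal.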

Note: If you want to reconfigure your Docker client to target the SwarmManager host instead of using SSH, you can do that by running docker-machine.exe env SwarmManager | Invoke-Expression. This will set the environment variables needed to reconfigure the Docker client to talk to the SwarmManager instead of the default Docker for Windows Docker host. This is temporary though, and will only affect the current PowerShell window.

Adding images to use in the swarm

The next step is to set up some images that we can use for our containers. But this time it is going to be a little different, because to be able to share images between nodes in the swarm, the images need to be in an external repository. And for this, I’m going to use Docker Hub. But before we get to that, we need an application to deploy. And in this case, it will be a simple ASP.NET Core web application. It’s VERY simple. It uses a startup file that looks like this

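using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Hosting;
using Microsoft.AspNetCore.Http;

public class Startup
{
    public void Configure(IApplicationBuilder app, IHostingEnvironment env)
    {
        // Answer every incoming request with the same plain-text greeting
        app.Run(async (context) =>
        {
            await context.Response.WriteAsync("Hello World!");
        });
    }
}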

As you can see, it is just a VERY basic application that returns “Hello World” on every incoming request.

And the Dockerfile for this application, which is located in the same folder as the application, looks like this

FROM microsoft/aspnetcore-build

COPY . /app

WORKDIR /app

EXPOSE 80

ENTRYPOINT ["dotnet", "run"]

Once I have my application and my Dockerfile, I need to build an image by running docker build. So I’ll go ahead and execute

docker build . --tag zerokoll/demo_app

This will build an image called zerokoll/demo_app locally on my machine. The zerokoll part is my Docker ID. It needs to be named like this to allow me to push it to Docker Hub.

Next I need to push it to Docker Hub, but to do that, I first need to log in by executing

docker login

This requires me to pass in my Docker ID and password.

Once that is done, I can push my demo_app image to Docker Hub by executing

docker push zerokoll/demo_app

This will push the entire image to my repo at Docker Hub, making it available to all my swarm nodes.

With that in place, we can go ahead and start a new container in the cluster.

Starting containers in the swarm

However, before we do that, I just want to mention that it is actually called starting a service in the cluster. A service is basically a container running in a swarm, but a service supports some other features like being able to run multiple, load-balanced instances across swarm nodes. So to be correct, we should be talking about setting up some services.

To get services up and running in the swarm, we need to tell the manager what services we want. We can do this in a couple of ways. We can either run commands like docker service create manually, or we can do it using docker-compose.yml files. And since we already know how to use these, let’s go ahead and use a docker-compose file.

I’ll create a new docker-compose.yml file that starts a container using the image we just created. It should look something like this

version: '3'

services:
  demo_app:
    image: zerokoll/demo_app
    ports:
      - "8080:80"

This will start a new service called demo_app, based on the zerokoll/demo_app image, binding the host’s port 8080 to the container’s port 80. However, to “deploy” it to the cluster, we don’t use the docker-compose command as we have done before. Instead we use docker stack deploy. And we need to run it on the manager, not our “local” host, which means that we need to copy the yml file to the manager machine, and then call the command on that machine using SSH.

So the first step is to copy the file to the manager using the following command

docker-machine scp docker-compose.yml SwarmManager:~

This will copy the docker-compose.yml file from the local folder to the manager. And then we need to deploy it to the swarm using the following command

docker-machine ssh SwarmManager "docker stack deploy -c docker-compose.yml demo_stack"

This will deploy the “stack” of services defined in the docker-compose file to the swarm.

However, this will take a little while, as the node that ends up running the service first needs to download the image before it can start it. “Unfortunately” this happens in the background, so it is a bit hard to figure out what is happening. But as soon as it is done, we should be able to see our beautiful “Hello World” text by browsing to the swarm manager. And to be able to do that, we need to know the swarm manager’s IP address, something that we can find out by running

docker-machine ls

This should give you a list of Docker machines running on your computer, including their IP addresses. So all you need to do to verify that everything is working is to copy the IP address of the SwarmManager machine and put it into your browser’s address bar, appending :8080.
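Since the image pull happens in the background, it can be handy to let a script keep trying until the application answers. Below is a minimal sketch of a generic retry helper, assuming a POSIX-style shell with curl installed. wait_for is a hypothetical name, and the attempt count and delay in the example are arbitrary.

```shell
# Retry a command until it succeeds or the attempts run out.
# Usage: wait_for <attempts> <delay-seconds> <command> [args...]
wait_for() {
  attempts="$1"
  delay="$2"
  shift 2

  n=1
  while [ "$n" -le "$attempts" ]; do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    n=$((n + 1))
    sleep "$delay"
  done
  return 1
}

# Example (30 tries, 5 seconds apart):
#   ip=$(docker-machine ip SwarmManager)
#   wait_for 30 5 curl -fs "http://$ip:8080" && echo "demo_app is up"
```

The -f flag makes curl return a failure code on HTTP errors, so the loop only stops once the service actually responds successfully.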

If you want to see what services are running in the swarm, you can run

docker-machine ssh SwarmManager "docker service ls"

This will show you all services running in the swarm. And as you can see, the demo_app service has been named by prefixing it with the stack name demo_stack. If you instead just want to see the tasks (the individual container instances) that belong to the stack called demo_stack, you can run

docker-machine ssh SwarmManager "docker stack ps demo_stack"
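If you would rather check from a script whether every service has reached its desired replica count, docker service ls accepts a --format template that can print just the name and the replica column. The following sketch, assuming a POSIX-style shell with awk, reads that output and lists the services that are still short. pending_services is a hypothetical helper name.

```shell
# Read "NAME CURRENT/DESIRED" lines on stdin and print the names of
# services that have not reached their desired replica count.
# Exits non-zero if at least one service is still short.
pending_services() {
  awk '{
    split($2, r, "/")
    if (r[1] != r[2]) { print $1; short = 1 }
  }
  END { exit (short ? 1 : 0) }'
}

# Example:
#   docker-machine ssh SwarmManager \
#     "docker service ls --format '{{.Name}} {{.Replicas}}'" | pending_services
```

An empty result with a zero exit code means that everything listed is fully up.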

And once you have verified that everything is working, and you are ready to tear down your “stack”, you can just execute

docker-machine ssh SwarmManager "docker stack rm demo_stack"

This will stop all the services that are currently running in the stack, and remove them, including the containers.

But wouldn’t it be cool if we could see what services are running on what node? Guess what, you can easily do that by adding an extra service to the mix.

Visualizing the containers in the swarm

The only thing we need to do to add some visualization to the swarm is to add another service/container to the mix. And to do that, we just need to update the docker-compose.yml file like this

version: '3'

services:
  demo_app:
    image: zerokoll/demo_app
    ports:
      - "8080:80"
    networks:
      - my_network

  visualizer:
    image: dockersamples/visualizer:stable
    ports:
      - "8081:8080"
    volumes:
      - "/var/run/docker.sock:/var/run/docker.sock"
    deploy:
      placement:
        constraints: [node.role == manager]
    networks:
      - my_network

networks:
  my_network:

As you can see, I have made a bunch of changes to the docker-compose.yml file. First of all, I have added a new service called visualizer, which is based on the image dockersamples/visualizer. This is a cool little service that gives us a website where we can see the state of the swarm.

For the visualizer service, I have mapped port 8081 on the swarm to the container’s port 8080, as well as set up a volume based on the Docker Unix socket. However, I have also set up a placement constraint, telling the manager that this service has to run on a swarm node where the node’s role is manager. The reason for this is that this service depends on information provided by the manager node. So adding this constraint will make sure that whenever this stack is deployed, this service will always be placed on the manager node.

Next, I have just made some things a bit more explicit. Mainly the networking. In this case, I am explicitly setting up a network called my_network, and adding both the services to that network. If we don’t explicitly tell the manager to set up a network, it will set up a default network, and add all the services to it, which is what happened in the previous version of the file.

So let’s go ahead and run

docker-machine scp docker-compose.yml SwarmManager:~

docker-machine ssh SwarmManager "docker stack deploy -c docker-compose.yml demo_stack"

This will copy across the new docker-compose.yml file, and then deploy the stack to the swarm. And once again, that will take some time, as it first needs to get the dockersamples/visualizer image. But once that is done, you should be able to go back to the browser and port 8080 and get that familiar “Hello World” response. However, you should now also be able to go to port 8081 to see the visualization of the nodes and services in the swarm. In my case, I can see the following

This tells me that I have 2 nodes, the SwarmManager and the SwarmWorker01, and that the manager runs the demo_stack_visualizer, while the worker runs just an instance of the demo_app. The little green dots in the top left corner of the “service boxes” are also telling me that the instances are up and running.

Note: It took me a minute before I got the screenshot, so the demo_stack_demo_app instance managed to turn green. But it did take a little while to go from red to green as the worker node first needed to pull the image from Docker Hub before it could get it started.

But right now, we are only running a single service besides the visualizer. So it’s going to be a really boring thing to look at. Not to mention that it doesn’t really show any of the cool load balancing features of the swarm. So let’s go ahead and modify the setting for the demo_app service, adding the following

…
services:
  demo_app:
    …
    deploy:
      replicas: 2
…

This tells the swarm manager that it should run 2 instances of the demo_app service in a load balanced fashion.

Unfortunately, we will have no clue that the load balancing is taking place at the moment, since the response will be the same from both services. So let’s update the application by adding some information about what host the application is running on. And the easiest way to do this, is by updating the Startup class, changing the response like this

await context.Response.WriteAsync("\"Hello World!\" says " + Environment.MachineName);

Ok, so now that the application will tell us what machine it is running on, let’s go ahead and deploy it.

First of all, we need to create a new image, by running the following command

docker build . --tag zerokoll/demo_app

and then push it to Docker Hub by executing

docker push zerokoll/demo_app

Finally, we need to deploy the updated stack by executing the following commands

docker-machine scp docker-compose.yml SwarmManager:~

docker-machine ssh SwarmManager "docker stack deploy -c docker-compose.yml demo_stack"

This first copies across the updated docker-compose.yml file, and then deploys it to the swarm, updating the demo_stack stack.

Tip: Pull up the visualizer window before executing the command to let you see what is happening. As you will see, it will take down one instance at a time, setting up a new instance before taking down the next.

This time, it only needs to pull down a little bit of data, as most of the layers are common between the 2 versions. So the update should be fast.

Once everything has completed, the visualizer should show you that you have 2 instances, or replicas, of the demo_app service running in the swarm. And they should be deployed to different nodes in the swarm. In my case, the visualizer looks like this

Once at least one of the demo_app instances is green, you should be able to browse to port 8080 and see the host name as part of the greeting. And when both instances are up, refreshing the page might show you the requests being load balanced.

I say might, because on my machine I had to pull up a new incognito browser window to get it to use another instance. But I’m not sure that is needed in all cases…
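A way to take the browser out of the picture is to send a handful of requests with curl and count the distinct answers. A browser tends to reuse an open connection, which may be why refreshing keeps hitting the same instance (that explanation is an assumption on my part), while every curl invocation opens a new one. The sketch below assumes a POSIX-style shell with curl and awk. tally and sample_responses are hypothetical helper names. Also note that inside a container, Environment.MachineName is normally the container’s own host name, so the tally identifies instances rather than VMs.

```shell
# Count identical lines read from stdin; prints "<count> <line>".
tally() {
  awk '{ seen[$0]++ } END { for (line in seen) print seen[line], line }' | sort -k2
}

# Send <count> requests to <url>, one connection each, and tally the replies.
sample_responses() {
  url="$1"
  count="$2"
  i=1
  while [ "$i" -le "$count" ]; do
    curl -s "$url"
    echo    # the app's response does not end with a newline
    i=$((i + 1))
  done | tally
}

# Example:
#   sample_responses "http://$(docker-machine ip SwarmManager):8080" 10
```

If the load balancing is working, the output should contain one line per running instance, each with its share of the requests.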

But what if we need to make some changes to the demo_app service? Well, in that case, we can just update the service and re-deploy it. So let’s try that…

First I’ll just update the application in some way. So I’ll go ahead and make a simple change to the response, like this

await context.Response.WriteAsync("\"Guten Tag\" says " + Environment.MachineName);

Making the application greet the user in German instead of English.

Then we need to generate a new image

docker build . --tag zerokoll/demo_app

and push it to Docker Hub

docker push zerokoll/demo_app

and then we can go ahead and update the service. We can do this in 2 ways. We can either push a new stack, which will update the service for us, or we can update the individual service by calling docker service update. However, to be able to use docker service update, we need to know the name of the service we want to update. So let’s first query the running services to see what services we have to choose from

docker-machine ssh SwarmManager "docker service ls"

should return something similar to

ID            NAME                   MODE        REPLICAS  IMAGE                            PORTS
6v8ejov604w7  demo_stack_visualizer  replicated  1/1       dockersamples/visualizer:stable  *:8081->8080/tcp
mp7eeu6tmvx3  demo_stack_demo_app    replicated  2/2       demo_app:latest                  *:8080->80/tcp

As you can see, all service names are prefixed with the name of the stack they are part of. This means that the service we want to update is called demo_stack_demo_app, which in turn means that the command we need to execute to update it is

docker-machine ssh SwarmManager "docker service update --image zerokoll/demo_app demo_stack_demo_app"

This will update the demo_stack_demo_app service to use the latest zerokoll/demo_app image. In practice, this means that one instance at a time is torn down, and put back up again, using the latest version of the image.

You should now be able to go back to the browser on port 8080, and refresh, and see the greeting in German. If not, give it a couple of seconds and try again. It can take a little while for the services to be updated, depending on the size of the update.

Tip: If you pull up the visualization on screen before you run the command, you will see the services being updated. Or rather, you will see the services being taken down one by one, and put back up using the new image.

Once you are done refreshing the browser and looking at the updated response coming from the updated services, you can tear down the whole thing by calling

docker-machine ssh SwarmManager "docker stack rm demo_stack"

And if you want to remove the hosts from the swarm as well, you can execute

docker-machine ssh SwarmWorker01 "docker swarm leave"

docker-machine ssh SwarmManager "docker swarm leave --force"

As you can see, you need to provide --force when taking the manager out of the swarm. The reason for this is that this takes down the entire swarm. Not something you want to do by mistake.

And if you want to remove the hosts from your machine as well, you can just execute

docker-machine rm SwarmManager

docker-machine rm SwarmWorker01

This should pretty much put your machine back to the state it was in before you started. Well…not quite… You also need to remember to go back to the Hyper-V Manager and remove the virtual switch that was added at the beginning of the post.

That was it for this post… Hopefully you now have a basic understanding of how you can set up a simple swarm, and deploy your stacks to it using docker stack.